reasoning

DeepMind framework offers breakthrough in LLMs’ reasoning

A breakthrough approach in enhancing the reasoning abilities of large language models (LLMs) has been unveiled by researchers from Google DeepMind and the University of Southern California. Their new ‘SELF-DISCOVER’ prompting framework – published this week on arXiv and Hugging Face – represents a significant leap beyond existing techniques, potentially revolutionising the performance of leading…

Reasoning and reliability in AI | MIT News

In order for natural language to be an effective form of communication, the parties involved need to be able to understand words and their context, assume that the content is largely shared in good faith and is trustworthy, reason about the information being shared, and then apply it to real-world scenarios. MIT PhD students interning with…

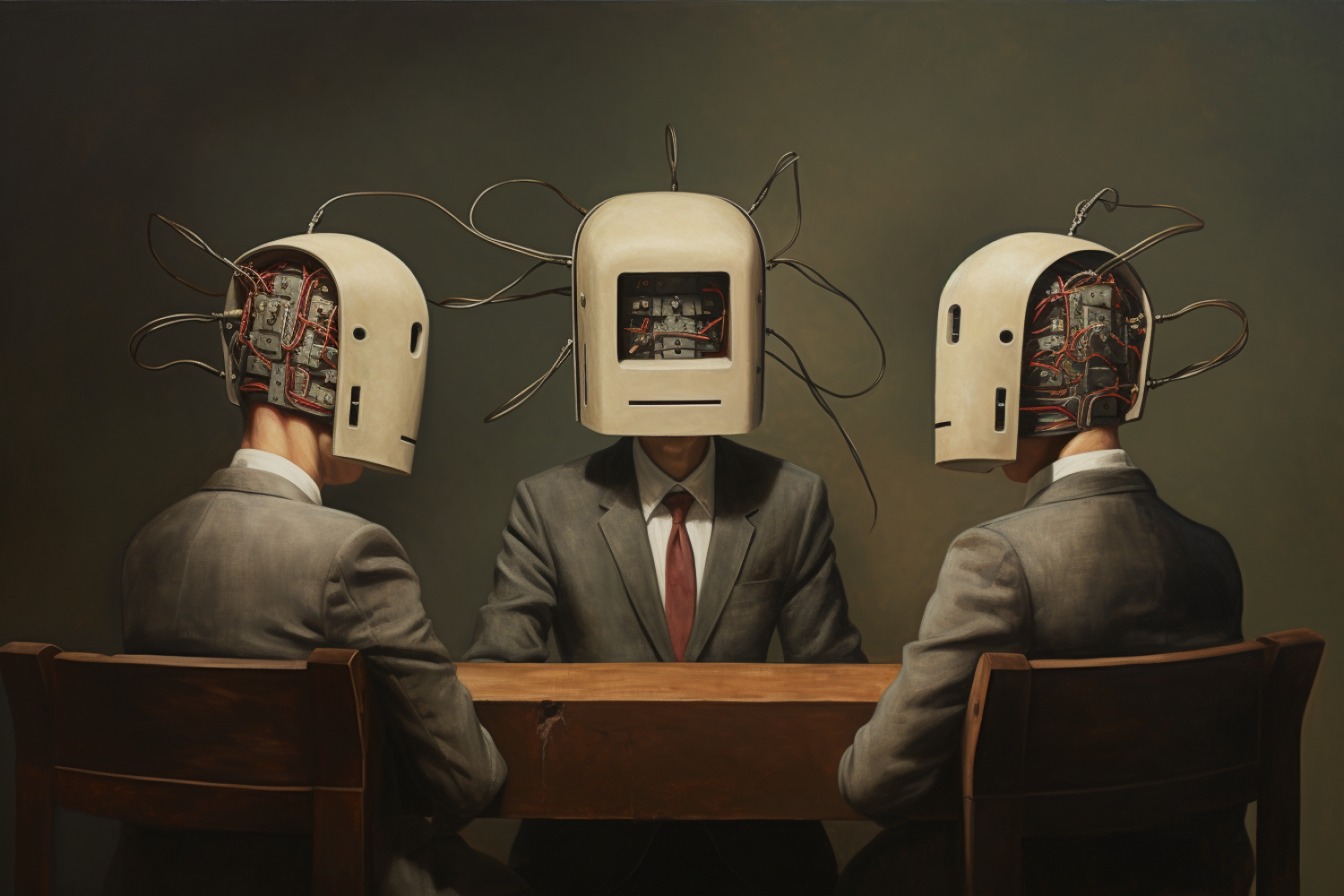

Multi-AI collaboration helps reasoning and factual accuracy in large language models | MIT News

An age-old adage, often introduced to us during our formative years, is designed to nudge us beyond our self-centered, nascent minds: “Two heads are better than one.” This proverb encourages collaborative thinking and highlights the potency of shared intellect. Fast forward to 2023, and we find that this wisdom holds true even in the realm…